Major companies are rapidly adopting Generative AI and Large Language Models (LLMs), helping their employees get familiar with the technology & offload some of their mundane tasks, be it drafting emails, summarizing documents, creating presentations or writing Excel formulas.

But have you ever wondered how you can tailor these models specifically to your company’s needs?

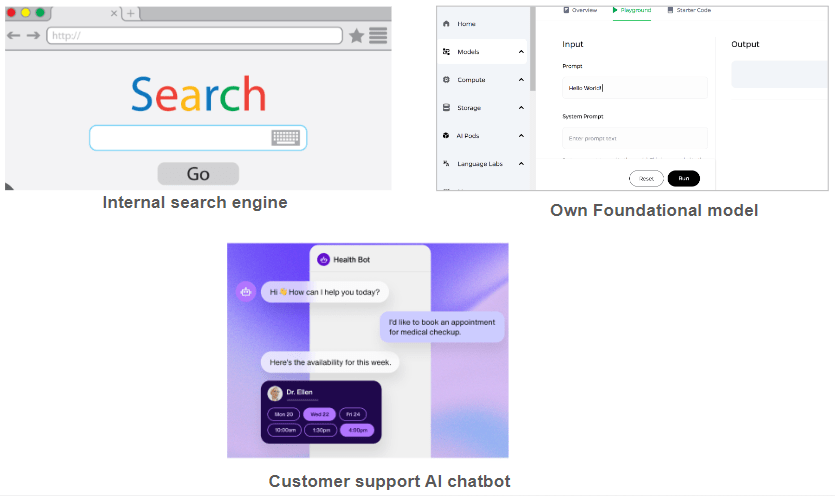

Let’s say we want to customize the model for the following applications:

This is where RAG (Retrieval-Augmented Generation) & Fine-tuning come in: both customize the model according to your needs and turn it into an intelligent AI assistant.

Which suits your requirement?

RAG is about connecting an existing, static foundation model (one trained on a generic dataset) to your company’s external data. For each question or task, the relevant data is retrieved and provided to the LLM as context. But if you want your model to behave in a certain way, or you need more than fetching relevant information, Fine-tuning comes into play. While both have their own significance, I’ll start with RAG & its implementation, and will cover Fine-tuning in my next article.

Let me give you a few simple RAG use-cases.

Example 1: The HR Policy Assistant

Imagine asking a regular AI chatbot: “What’s our company’s paternity leave policy?”

Regular AI: “I cannot provide specific information about your company’s policies.” Alternatively, the model may hallucinate*.

*Hallucination: Sometimes a model answers with full confidence, yet something about its output seems off. When you later verify the information, you discover that it is inaccurate or completely fabricated. This behavior of LLMs is called hallucination.

RAG-enabled AI: “According to the latest HR policy updated last month, employees are eligible for 12 weeks of paid paternity leave. This can be taken within 6 months of the child’s birth or adoption. For more details, you can refer to Section 3.2 of the Employee Handbook in Success Factors.”

Example 2: The Sales Intelligence Tool

Consider a sales representative asking about a client, ABC Corp:

Regular AI: “I cannot access specific information about your clients.”

RAG-enabled AI: “Based on our latest CRM data, ABC Corp has been our client for 3 years. Their last purchase was two weeks ago for $50,000. They currently have an open support ticket about system integration. Would you like to see their purchase history or current contract details?”

The implementation for RAG:

One commonly adopted way to implement RAG is through the following steps:

- External Data Preparation: Since external data sources play a significant role in RAG, a critical first step is to prepare the data so that it is compatible with the LLM. The raw files are cleaned to remove irrelevant content, and large documents are broken into small, manageable chunks of information.

- Data conversion into embeddings: The cleaned chunks now have to be converted into vectors. This means transforming the text into embeddings, which capture the meaning of the text. These vectors are then stored in a vector database such as Milvus, Chroma DB, Pinecone or Weaviate, to name a few (these provide vector indexing, which is used for fast retrieval).

- In simple words, an embedding is the language that an AI model understands. It lets the model compare data based on the meaning (properties) of the text, instead of a simple word-to-word comparison. For more details, visit my previous article on embeddings: https://aipods.co/2024/08/21/embedding-the-language-of-ai-world-2-2/

- Information Retrieval: Just as the external data was converted into vectors, the user query is also converted into a vector. This query vector is used to search the vector database (from step 2) and pull the most relevant chunks, let’s say the top k, from multiple documents. The match is based on semantic meaning and is performed by the retriever, as shown in Image 1.

- RAG final step: You now have the question and the retrieved relevant chunks. Both are passed to the LLM, as text in the prompt, so that it can frame the final answer. Along with the final answer, you can also return the relevant sources (like the chunks from the relevant documents, or links).
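To make the preparation, embedding and retrieval steps above a little more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the function names (`chunk_text`, `embed`, `build_index`, `retrieve`) are hypothetical, the sample documents are made up, the bag-of-words `embed` is only a toy stand-in for a real embedding model, and the in-memory list stands in for a vector database.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40, overlap=10):
    """Split text into overlapping word windows (assumes overlap < max_words)."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    """Chunk and embed every document; returns (source, chunk, vector) entries."""
    index = []
    for name, text in documents.items():
        for chunk in chunk_text(text):
            index.append((name, chunk, embed(chunk)))
    return index


def retrieve(index, query, k=2):
    """Embed the query and return the k most similar (source, chunk) pairs."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda e: cosine(query_vector, e[2]), reverse=True)
    return [(source, chunk) for source, chunk, _ in ranked[:k]]


# Made-up sample data, standing in for cleaned company documents.
documents = {
    "hr_handbook": "Employees are eligible for paid paternity leave. "
                   "Leave can be taken after the birth or adoption of a child.",
    "it_guide": "Reset your laptop password through the self-service portal. "
                "Contact the help desk for hardware issues.",
}
index = build_index(documents)
top_chunks = retrieve(index, "What is the paternity leave policy?", k=1)
print(top_chunks)
```

In a real setup, `embed` would call an embedding model and the index would live in one of the vector databases mentioned above, but the flow of chunk, embed, store and search stays the same.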

Image 1: RAG process flow
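The final step of the flow, handing the question and the retrieved chunks to the LLM, can be sketched roughly as follows. This is an illustration under assumptions, not a definitive implementation: `build_prompt`, `answer` and `fake_llm` are hypothetical names, the prompt wording is just one possible template, and `fake_llm` is a placeholder for whatever model call you actually use.

```python
def build_prompt(question, retrieved):
    """Combine the question and retrieved (source, chunk) pairs into one prompt."""
    context = "\n".join(
        f"[{i}] ({source}) {chunk}"
        for i, (source, chunk) in enumerate(retrieved, start=1)
    )
    prompt = (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
    # Keep each source once, in the order it was retrieved.
    sources = list(dict.fromkeys(source for source, _ in retrieved))
    return prompt, sources


def answer(question, retrieved, llm):
    """llm is any callable that maps a prompt string to a completion string."""
    prompt, sources = build_prompt(question, retrieved)
    return {"answer": llm(prompt), "sources": sources}


def fake_llm(prompt):
    """Hypothetical placeholder for a real model call, so the sketch runs offline."""
    return "(model output would appear here)"


# Made-up retrieved chunk, as a retriever might return it.
retrieved = [("hr_handbook", "Employees are eligible for paid paternity leave.")]
result = answer("What is the paternity leave policy?", retrieved, fake_llm)
print(result["sources"])
```

Returning the sources alongside the answer is what lets the assistant point the user to the relevant document section, as in the HR example earlier.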

On-Paper implementation

There are various existing frameworks & tools, such as LangChain and Google Colab to name a few, that can be leveraged to implement RAG on top of your existing foundation models. For instance, LangChain offers an easy-to-follow implementation. You can explore more details at this link: https://python.langchain.com/docs/tutorials/rag/

Summary

So, the next time you’re thinking of implementing your own search engine or a customized LLM that provides real-time, context-specific answers, RAG is the way forward. You don’t need to build your own ChatGPT or any other AI model from scratch; after all, we have plenty of them around, including open-source ones. All you need is to build RAG on top of these models & you have a custom solution tailored to your organization’s needs.
